Although the topic is on everyone’s mind lately, I have been presenting my views on artificial intelligence at conferences for more than a decade, long before it became trendy. I developed my logo with AI, and I use AI tools in my work as well, so it is not that big a deal to me. I was not really planning on writing a post about it because:

1) there are already so many of them around;

2) I have done presentations including the subject for many of my clients for many years and I even mentioned it in one of my poems about technologies from Down to Earth, my poetry book that I published in 2021;

3) it is just not me to jump in and follow the herd just for the sake of getting some attention.

Then I read a recent article, which made me change my mind and sit down at the keyboard.

The article was about the results of research carried out by Harvard Business School, Reskilling in the Age of AI. The part that I found quite interesting was that, according to this research, artificial intelligence would reduce the gap between mediocre consultants and the “elite” consultants. The mediocre ones saw a performance boost of 43% thanks to AI, while the performance increase for the top consultants was much more modest. My spontaneous reaction was to conclude that businesses should either work only with top-quality consultants or, when the middleman is one of the mediocre ones, eliminate him and make the switch to AI themselves, which is more or less what I hinted at in one of my answers when I set up my FAQ section many years ago.

Another anecdote that shapes my views on AI and digitalization is what happens if there is a slight mistake in an online order. If the system does not recognize something in the information submitted, then we are dealing with artificial stubbornness, which is second to none, not even the “natural” one. I am sure many of you have experienced the frustration of dealing with an automated package tracking system, and the agony of finding a real person who might be able to fix the problem.

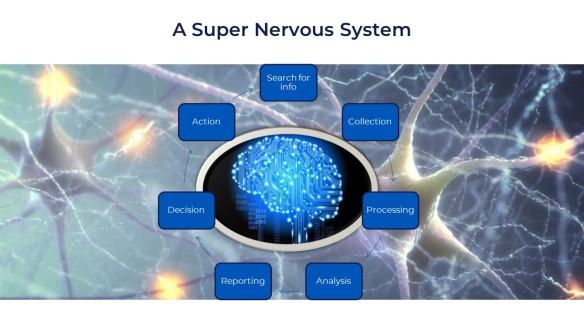

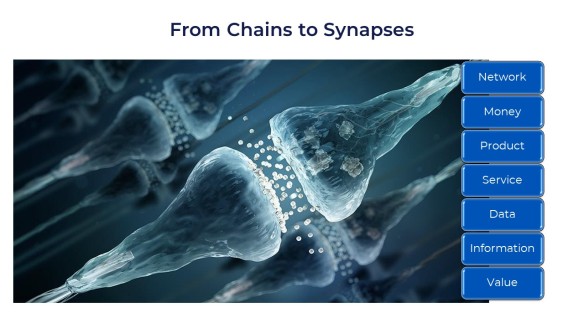

Let’s face it, AI is still in its infancy and there is a whole world to open up in the future. As an illustration, I post in this article two slides taken from some of my presentations, which were not about AI as such, but in which I indicated in what respect the current automation in food and agriculture differs so much from the mechanization of the 20th century and earlier. The “old” automation was basically meant to replace human (and animal) labour and allow one person to perform physical tasks that had previously required many more individuals. Mechanization was really all about adding “muscle” to the farmer and the worker, and sometimes about replacing them, too.

The 21st century automation, although it still adds muscle, is much more about adding a nervous system. Satellites, sensors of all sorts, management software, robotics, driverless vehicles and the many other new technologies, when combined, actually mimic the nervous system as we know it. There are limbs, contact organs, senses and nerves that transport and transmit information to the brain (the intelligence centre), which processes it and sends instructions back to the entire system to take action in the field, in the factory, in logistics or in the store or restaurant. As the flow of data is essential for effective performance, it is clear that the synapses are of the utmost importance for this artificial nervous system. This is why all the new technologies must be looked at from a system point of view, with attention to how they interact with one another. Developing technologies independently is a mistake, as it will miss many of these connections.

So, we are building a nervous system, and since it is in its infancy, the way forward is really to treat it as an infant and follow the same process and the same steps that are required to develop a new human being and bring it up into a well-functioning grown-up. First, it is important to develop its cognitive abilities by exposing it to as many experiences as possible, under serious supervision, of course. This will help the development of the right connections, and the right number of them, in the nervous system. That is essential for AI to be able to function and deal with new and unknown situations and problems that need to be solved. When I was a student, one of my teachers defined intelligence as the ability to cope with and overcome situations never met before. I like that definition. As the “subject” develops further, it will need to learn more and more and, of course, the way to learn is to get a solid education, which means gathering knowledge, understanding how the knowledge connects together, and being able to exercise critical thinking, that is, discerning true facts from raving nonsense. The learning process must be built on serious sources and there, too, serious supervision is needed. Just like with education, the system needs to be tested for progress and when difficulties arise, there must be proper monitoring and tutoring to help the “student” achieve success. The “subject” is still young and can still fall under bad influences that might undermine its ability to identify the correct information and reject the nonsense, and thus perform properly. Really, developing AI looks a lot like raising a child all the way into adulthood.

The user must also take proper action to adapt and grow together with the nervous system. The artificial brain may be much faster at processing data than a human brain, but humans using AI must be able to assess whether the outcome of the data processing makes sense or not. We must be able to spot if something in the functioning of the system is wrong, should that happen, in order to stop it from causing further errors and damage. If you use ChatGPT, to name a popular AI system, to write an essay and you do not proofread it for errors, both in content and form, then you expose yourself to possible unpleasant consequences. Automation and AI are to do work with us, not instead of us. Our roles will change, but just as it was not acceptable before, laziness (OK, let’s call it complacency) cannot be acceptable in the future, either.

Of course, like with any innovation, we must make a clear distinction between tool and gadget. So, what is the difference between the two? A tool performs a task, which has a clearly defined objective and a clearly defined result. It must be effective and efficient. A gadget is just for fun and distraction. Tools evolve to get more useful. Gadgets do not, and disappear when another gadget that is more entertaining comes along.

Earlier, I pointed out the need to discern good sources of knowledge and information from nonsense. The saying “you are what you eat” is actually rather appropriate when it comes to AI in its current form. Indeed, the data AI is fed on will strongly influence what it produces.

In the food and agriculture production, supply and distribution chain, AI should normally work with reliable data, as the data should originate from well-known and reliable sources. Although the data can be quite voluminous, it is limited to these reliable sources, often the data of the producers themselves. Therefore, as a tool, AI will be quite useful to tackle all the challenges that the sector is facing now and in the future. If the AI system that you use picks its data and information from an uncontrolled source, like the internet, you must realize that unless it has very solid safeguards to discriminate between truth and falsehoods, it will end up compiling the good, the bad and the ugly and thus further spread poison. Therefore, close scrutiny and monitoring are of the highest importance.
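
For readers who like to see the idea in concrete form, here is a minimal sketch of the simplest possible safeguard: only accept data from sources you already trust, and set everything else aside for a human to review. It is an illustration only, not how any particular AI system works. The source names (`farm_sensors`, `producer_records`, `web_scrape`) and the `Record` and `split_by_trust` names are hypothetical and were made up for this example.

```python
from dataclasses import dataclass

# Hypothetical allowlist of trusted sources; the names are illustrative only.
TRUSTED_SOURCES = {"farm_sensors", "producer_records"}


@dataclass
class Record:
    source: str  # where the piece of data came from
    text: str    # the data itself


def split_by_trust(records, trusted=TRUSTED_SOURCES):
    """Separate records from trusted sources from those needing human review."""
    accepted = [r for r in records if r.source in trusted]
    held_for_review = [r for r in records if r.source not in trusted]
    return accepted, held_for_review


records = [
    Record("farm_sensors", "soil moisture reading for field 7"),
    Record("web_scrape", "miracle fertilizer doubles yield overnight"),
    Record("producer_records", "harvest logged for field 7"),
]

accepted, held_for_review = split_by_trust(records)
print(len(accepted), "accepted;", len(held_for_review), "held for review")
```

A source allowlist says nothing about whether an individual piece of data is true; it only narrows where the data comes from, which is why the scrutiny and monitoring mentioned above remain necessary.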

Further, here is a video on the subject that I posted on my YouTube Channel:

Copyright 2023 – Christophe Pelletier – The Food Futurist – The Happy Future Group Consulting Ltd.